Over the past two weeks, I have been revisiting the mystical realm of Kubernetes, a technology that has fascinated me for years. In the past, I followed simple guides that mostly provided automated scripts to quickly deploy a cluster, then ran a few kubectl commands to deploy simple applications. In this series of posts, I would like to share my journey of setting up a GitOps-managed (more on this in a bit!) k3s cluster with separate staging and production environments. If you’re looking to build a similar setup, I will walk you through my architecture decisions, implementation details, and the lessons I learned along the way.

Why k3s and GitOps

Before diving into the technical details, let’s look at why I chose k3s and GitOps for my Kubernetes Home Lab. I chose k3s for a few reasons: it is quick and simple to deploy, and there is lots of great documentation out there. It is very lightweight, allowing it to run on my limited resources, and it maintains full compatibility with standard Kubernetes. This makes it great for my learning environment, where I want to play with all of the production-grade capabilities but don’t necessarily need the full horsepower of a production environment. That will be the next project!

As for GitOps, there is just something about a declarative workflow. I have long been a fan of NixOS (you’re welcome for this rabbit hole; see you next year) for this very reason. Instead of running a handful of kubectl commands, I can write a manifest describing how I want the service to look, push that change to GitHub, and watch the magic happen. By following GitOps principles, every change to my infrastructure is tracked, reviewed, and automatically applied. This gives me an audit trail, easier rollbacks, and a single source of truth for the cluster’s desired state.

Cluster Architecture

Let’s start with my hardware and network setup. The cluster consists of three nodes:

Control Plane

- A laptop running Ubuntu 24.04 LTS

- 4 cores and 8GB RAM

- 250GB NVMe storage

- Full disk encryption enabled

- IP: 10.0.1.40

Worker Nodes

I have two identical worker nodes (VMs) running on my Proxmox host, each configured with:

- Ubuntu 24.04 LTS cloud image

- 3 vCPU and 3GB RAM

- 63.5GB OS disk

- 200GB dedicated storage disk mounted at /var/lib/rancher/k3s/storage

Network Configuration

I chose to keep the networking simple and straightforward, sticking with the k3s defaults wherever possible:

- Dedicated IP range for k3s nodes: 10.0.1.40-49

- Default k3s networking using Flannel

- Pod CIDR: 10.42.0.0/16

- Service CIDR: 10.43.0.0/16
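A quick way to sanity-check an addressing plan like this is a few lines of Python using the standard-library ipaddress module. The sketch below is an illustration, not something from the repository: the control plane IP and both CIDRs come from the configuration above, while the worker IPs (10.0.1.41 and 10.0.1.42) and node names are hypothetical placeholders inside the reserved range. It checks that the pod and service CIDRs don't overlap and that no node address falls outside the node range or inside either cluster CIDR.

```python
import ipaddress

# Values from the network configuration above.
NODE_RANGE_START = ipaddress.ip_address("10.0.1.40")
NODE_RANGE_END = ipaddress.ip_address("10.0.1.49")
POD_CIDR = ipaddress.ip_network("10.42.0.0/16")
SERVICE_CIDR = ipaddress.ip_network("10.43.0.0/16")

# Hypothetical node inventory: only the control plane IP is from the post;
# the worker IPs are placeholders inside the reserved range.
NODES = {
    "control-plane": "10.0.1.40",
    "worker-1": "10.0.1.41",
    "worker-2": "10.0.1.42",
}


def check_network(nodes):
    """Return a list of problems found in the addressing plan (empty if OK)."""
    problems = []
    if POD_CIDR.overlaps(SERVICE_CIDR):
        problems.append("pod and service CIDRs overlap")
    for name, ip_str in nodes.items():
        ip = ipaddress.ip_address(ip_str)
        if not NODE_RANGE_START <= ip <= NODE_RANGE_END:
            problems.append(f"{name} ({ip}) is outside the node range")
        # A node IP inside a cluster CIDR would collide with pod/service routing.
        for label, net in (("pod", POD_CIDR), ("service", SERVICE_CIDR)):
            if ip in net:
                problems.append(f"{name} ({ip}) falls inside the {label} CIDR")
    return problems


if __name__ == "__main__":
    issues = check_network(NODES)
    print("addressing plan OK" if not issues else "\n".join(issues))
```

With the values above it prints "addressing plan OK"; changing a node IP to something like 10.42.0.5 would flag it both as outside the node range and as inside the pod CIDR.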

GitOps Implementation

The heart of the setup is my GitOps repository structure. I have organized my code to clearly separate different concerns and environments:

Check out my setup on GitHub: https://github.com/we-r-robots/k3s-gitops

k3s-gitops/
├── apps/               # Application deployments
│   ├── base/           # Base configurations
│   ├── production/     # Production-specific configs
│   └── staging/        # Staging-specific configs
├── clusters/           # Flux-specific configurations
│   ├── production/
│   └── staging/
└── infrastructure/     # Cluster infrastructure

This structure follows the common Kustomize base/overlay pattern, allowing me to:

- Maintain common configurations in the base directory

- Apply environment-specific overrides in the production and staging directories

- Keep infrastructure components separate from applications

- Manage Flux-specific configurations per cluster
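To make the base/overlay idea concrete, here is a deliberately simplified Python sketch of what an overlay does: start from the shared base and layer environment-specific values on top. This is not how Kustomize is implemented; its strategic merge patches are smarter (for example, they merge container lists by name, while this sketch replaces lists wholesale). The podinfo Deployment fields and replica counts are hypothetical.

```python
import copy
import json


def merge_overlay(base, overlay):
    """Return a deep copy of base with overlay's values layered on top.

    Nested dicts are merged key by key; any other value (including lists)
    in the overlay replaces the base value outright.
    """
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_overlay(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical, trimmed-down base Deployment shared by every environment.
BASE = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "podinfo"},
    "spec": {"replicas": 1},
}

# Hypothetical overlays: only the differences are written down.
STAGING = {"metadata": {"namespace": "staging"}}
PRODUCTION = {"metadata": {"namespace": "production"}, "spec": {"replicas": 3}}

if __name__ == "__main__":
    for overlay in (STAGING, PRODUCTION):
        print(json.dumps(merge_overlay(BASE, overlay), indent=2))
```

The design point is that the base never changes per environment; each overlay only states what differs.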

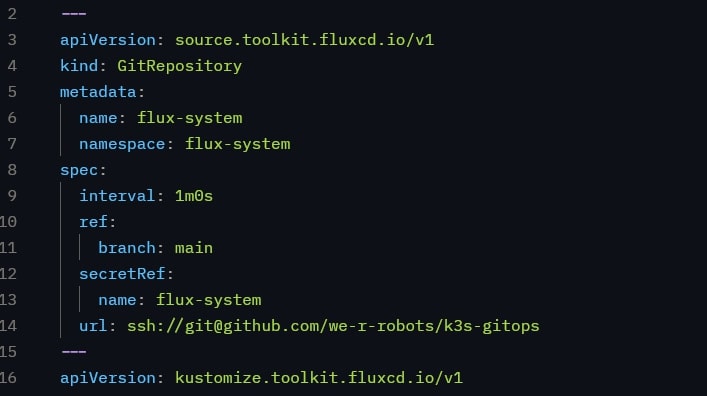

I am using FluxCD to manage my cluster state. Each environment has its own set of Kustomizations (Flux resources that point at a directory of manifests in the repository):

- Flux-System (core Flux components)
  - each cluster environment has its own Flux components
  - components are bootstrapped into the cluster using the Flux CLI

- Apps (application deployments)
  - applications have individual base/staging/production Kustomizations

- Infrastructure (shared cluster components)
  - cert-management, monitoring, networking, etc.

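All Kustomizations currently run on a 10-minute sync interval with pruning enabled: Flux reconciles the Git state every 10 minutes, and any resource removed from the repository is also deleted from the cluster, keeping the cluster state in sync with the Git repository.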
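For reference, here is a sketch of what one of these Flux Kustomizations looks like, built as a Python dict and printed as JSON (JSON is valid YAML, so the output could be committed as a manifest). It assumes the GA Flux API version kustomize.toolkit.fluxcd.io/v1 and a GitRepository named flux-system, which is the default that flux bootstrap creates. The apps-staging/apps-production names and ./apps/<env> paths are hypothetical and may not match the actual repository.

```python
import json


def flux_kustomization(name, path, interval="10m"):
    """Build a Flux Kustomization that reconciles `path` with pruning on."""
    return {
        "apiVersion": "kustomize.toolkit.fluxcd.io/v1",
        "kind": "Kustomization",
        "metadata": {"name": name, "namespace": "flux-system"},
        "spec": {
            "interval": interval,  # how often Flux reconciles this path
            "path": path,          # directory in the Git repo to apply
            "prune": True,         # delete cluster objects removed from Git
            "sourceRef": {"kind": "GitRepository", "name": "flux-system"},
        },
    }


if __name__ == "__main__":
    # Hypothetical names/paths: one Kustomization per environment.
    for env in ("staging", "production"):
        print("---")  # YAML document separator; each JSON document is valid YAML
        print(json.dumps(flux_kustomization(f"apps-{env}", f"./apps/{env}"), indent=2))
```

In a layout like the one above, each of these would typically live under its own clusters/<env> directory rather than being generated together.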

Secret Management

Secrets management is a critical part of any environment. To handle it, I chose a project called External Secrets Operator (ESO):

- AWS Secrets Manager serves as my back-end secret store

- Kubernetes secrets follow a kube-* prefix pattern for easy identification and permission scoping

- ESO assumes an IAM role that can only read/write secrets with this prefix

- I have set up a Kubernetes ClusterSecretStore that provides access to secrets cluster-wide
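The prefix scoping can be expressed as an IAM permissions policy on the role ESO assumes. Below is a minimal Python sketch that builds such a policy document; the region, account ID, and exact action list are assumptions, so check the ESO AWS provider documentation for the permissions the ESO features you use actually need. The trailing * in the resource ARN matters: Secrets Manager appends a random six-character suffix to every secret ARN, so an ARN ending at the bare secret name would not match. The role's trust policy (who may assume it) is a separate document and is not shown.

```python
import json

# Placeholders: substitute your own region and AWS account ID.
REGION = "us-east-1"
ACCOUNT_ID = "123456789012"
PREFIX = "kube-"


def eso_policy(region, account_id, prefix):
    """Build an IAM policy limited to Secrets Manager secrets named prefix*."""
    # The trailing * also covers the random suffix Secrets Manager adds to ARNs.
    secret_arn = f"arn:aws:secretsmanager:{region}:{account_id}:secret:{prefix}*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadWriteKubePrefixedSecrets",
                "Effect": "Allow",
                # Assumed read/write set; adjust to what your ESO usage needs.
                "Action": [
                    "secretsmanager:GetSecretValue",
                    "secretsmanager:DescribeSecret",
                    "secretsmanager:ListSecretVersionIds",
                    "secretsmanager:PutSecretValue",
                    "secretsmanager:UpdateSecret",
                ],
                "Resource": secret_arn,
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(eso_policy(REGION, ACCOUNT_ID, PREFIX), indent=2))
```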

This setup allows me to:

- Keep secrets out of my Git repository (especially since it’s public)

- Manage secrets in a central AWS store for Kubernetes and non-Kubernetes applications

- Automatically sync secrets, including rotated values, into my k3s cluster
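As an illustration of the sync side, here is a sketch of an ExternalSecret that pulls one AWS secret into a namespace through the ClusterSecretStore. The store name aws-secrets-manager, the secret names, and the 1h refresh interval are hypothetical. The refreshInterval is what lets rotated values flow through: ESO re-reads the secret from AWS on that schedule and updates the Kubernetes Secret. The apiVersion defaults to external-secrets.io/v1beta1 here; newer ESO releases also serve external-secrets.io/v1, so match whatever your installed CRDs report. The sketch also refuses keys without the kube- prefix, mirroring the kube-* convention described above.

```python
import json

PREFIX = "kube-"  # naming convention for AWS secrets used by the cluster


def external_secret(name, namespace, remote_key,
                    store="aws-secrets-manager",  # hypothetical store name
                    refresh="1h",
                    api_version="external-secrets.io/v1beta1"):
    """Build an ExternalSecret that copies an AWS secret into `namespace`."""
    if not remote_key.startswith(PREFIX):
        raise ValueError(f"{remote_key!r} does not use the {PREFIX}* prefix")
    return {
        "apiVersion": api_version,
        "kind": "ExternalSecret",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # ESO re-reads AWS on this interval, picking up rotated values.
            "refreshInterval": refresh,
            "secretStoreRef": {"kind": "ClusterSecretStore", "name": store},
            # The generated Kubernetes Secret is owned (and cleaned up) by ESO.
            "target": {"name": name, "creationPolicy": "Owner"},
            # Extract every key/value pair from a JSON-formatted AWS secret.
            "dataFrom": [{"extract": {"key": remote_key}}],
        },
    }


if __name__ == "__main__":
    manifest = external_secret("podinfo-credentials", "staging", "kube-podinfo")
    print(json.dumps(manifest, indent=2))
```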

Current State and Features

Environment Separation

- Distinct production and staging namespaces

- Independent configuration management

- Namespace-level resource isolation

Infrastructure Components

- CoreDNS for service discovery

- Metrics Server for resource metrics

- Traefik Ingress for incoming traffic

- Local Path Provisioner for storage

- ServiceLB for service load balancing

GitOps Workflow

- All changes tracked in Git

- Automatic synchronization via Flux

- Clear promotion path from staging to production

Lessons Learned

Throughout this implementation, I have learned valuable lessons:

- K.I.S.S. - This motto rings true for me time and time again. I have a tendency to reach for the end goal before I have even started. Sticking with a simple, easy-to-deploy k3s cluster allowed me to get started, use my existing equipment, and actually start learning!

- Infrastructure as Code is my spirit animal. It is hard to describe just how satisfying it is to define some blocks of code and then watch all the pieces fall into place to match that desired state. It also makes tracking version history and changes so much easier. Also, having my entire cluster setup synced to GitHub allows me to recreate this cluster again and again (which means I can break things!).

- Finally, I am reminded of a tired old sign that used to hang on the wall at a previous employer: the 5 P’s, “Proper Planning Prevents Poor Performance.” I used to chuckle at that sign, mainly because it ironically had a typo in it, but the philosophy has proven valuable. Taking the time to set up proper separation of staging and production, implement a robust, scalable, and secure system for managing secrets, and lay other foundational elements has set this project up for success.

What’s Next?

Monitoring Setup

- Deploying a Prometheus, Grafana, and Loki stack

- Setting up alerts for cluster health

- Monitoring External Secrets sync status

Database

- Implementing PostgreSQL with high availability

- Setting up automated backups

- Configuring external secret integration

Security Enhancements

- Implementing network policies

- Configuring resource quotas

- Setting up RBAC for applications

CI/CD Pipeline

- Setting up GitHub Actions

- Implementing container image building

- Creating promotion workflows

Documentation

- Creating an application onboarding guide

- Establishing secret management procedures

- Documenting the environment promotion process

Conclusion

Building a k3s cluster and learning about GitOps workflows and best practices has been fun and challenging so far. Even though I haven’t deployed a single application yet (aside from the recommended podinfo web app to verify that my cluster was actually working), it feels really good to take it slow, trudge through mountains of documentation, and build a solid foundation for what is to come. The more I dive into declarative workflows like GitOps, the more enamored I become with them. I cannot wait to start on the next steps.

Stay tuned for future posts in this Kubernetes series. I plan to do a deep dive into the specifics of my FluxCD setup, how I connected ESO to AWS Secrets Manager, and the upcoming projects: database management, monitoring stacks, and CI/CD pipeline development. Thanks for coming along on this journey with me.